For film and television productions working in documentaries, unscripted television, and news, the adoption of AI-powered image and video tools raises important questions. Increasingly, productions are expected to meet modern 4K and high-resolution distribution requirements using legacy, mixed-format, or lower-resolution source material, which brings two fundamental concerns to the surface:

- Will these tools improve quality while preserving the original content’s character, without altering or adding new visual characteristics?

- Where is the boundary between restoration and alteration when legacy media is brought forward for modern delivery?

In nonfiction storytelling, editorial integrity and visual authenticity are foundational. Audiences expect what they see to reflect reality. As a result, adopting AI in professional media requires more than a binary decision to use AI or not use AI. It requires an understanding of how different models behave.

At Topaz Labs, we work closely with post-production teams across broadcast, documentary, archival, and news workflows. One conclusion is consistent: not all AI models operate the same way, and selecting the right class of model is essential to preserving intent.

This article defines the practical differences between precision enhancement models and generative diffusion models, and explains how each can be applied responsibly in professional media workflows.

Classes of AI Models with Different Goals

Modern image and video enhancement models used in post-production generally fall into three categories: precision models, generative models, and creative models.

- Precision models are designed to improve quality by refining and enhancing visual information already present in the source. They operate directly on existing image structure, texture, and signal characteristics, and are intended to preserve the original content’s character without reconstructing or inferring new visual elements.

- Generative models improve quality by reconstructing or inferring detail and texture based on both the existing visual signal and learned semantic understanding of scenes, objects, and structure.

- Creative models prioritize semantic synthesis over source fidelity and do not attempt to preserve original visual detail. They can be guided by user input or prompts and are commonly used for stylization, look development, and native generative AI content.

Because creative models are designed to reinterpret or invent visual content, they are outside the scope of this discussion.

Understanding these distinctions is critical for nonfiction workflows where authenticity and editorial integrity must be maintained.

Precision Video Models: Improving Without Changing

For most documentary, nonfiction, and unscripted workflows, precision-first enhancement is the preferred approach. Topaz video models such as Proteus, Iris, Artemis, and Gaia are designed to make controlled improvements while preserving the original character of the footage.

Precision models are commonly used to upscale and improve footage by:

- Removing fields and pulldown

- Reducing noise and grain

- Repairing compression artifacts

- Improving perceived sharpness and clarity

These models typically expose user-adjustable parameters, allowing operators to control the degree of enhancement. This mirrors established film and video workflows and keeps editorial control with the user.

Because precision models refine existing information rather than reconstructing new detail, they are well suited for nonfiction content, including high-quality archival material. When input quality is strong, enhancements are subtle. When input quality is poor, these models remain conservative.

Generative Video Models: When Reconstruction Is Required

For nonfiction content, generative models serve a different purpose.

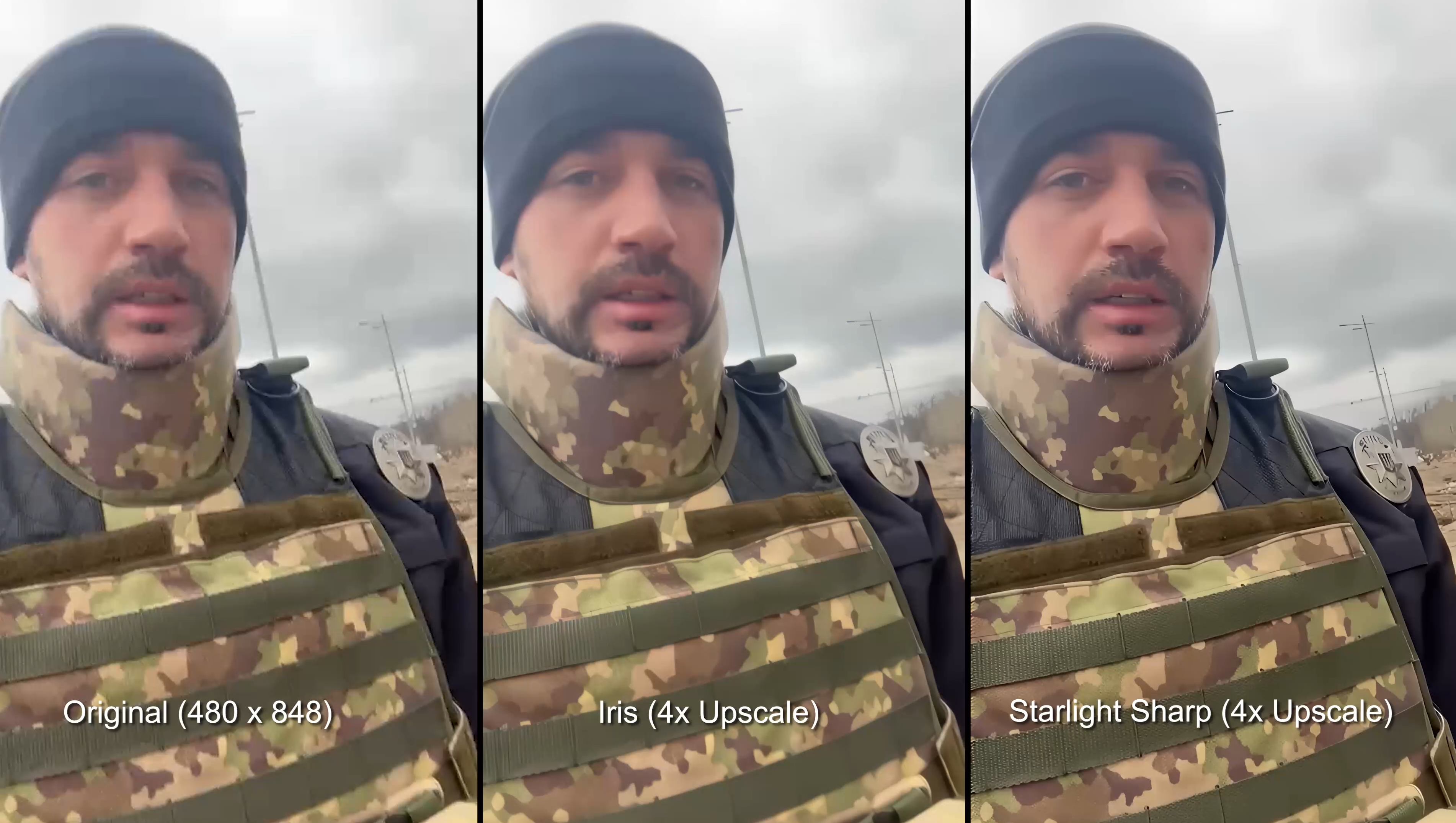

The Starlight family of models is designed for footage that is extremely degraded, heavily compressed, very low resolution, or synthetic in origin. In these cases, refinement alone is insufficient and some level of reconstruction becomes necessary.

Generative models can bring previously indistinguishable people or objects into clearer focus when source material is severely degraded. However, the diffusion process may infer detail or texture that is not explicitly present in the original footage. While Topaz designs these models to be as precise and realistic as possible, generative enhancement remains a reconstructive process and requires informed editorial judgment, particularly in nonfiction workflows.

Variants such as Starlight Precise, Starlight Mini, and Starlight Sharp are designed to add less inferred detail than fully creative generative models. They are commonly used for damaged archival material or for improving native generative AI video prior to professional finishing.

These models operate as automatic processes and do not expose user parameters. They can produce significant quality gains, but depending on the content, they may not be the first choice for critical nonfiction material.

Image Models: The Same Distinction Applies

The same precision versus generative distinction applies to image and photo enhancement.

Precision image models, such as Standard 2, High Fidelity 2, and Low Resolution 2 in Topaz Gigapixel and Topaz Photo, are designed to improve resolution and clarity by refining existing visual information while preserving original structure. They rely on familiar controls such as denoising, sharpening, and compression repair.

Generative image models, such as Wonder, Redefine, and Recover, use diffusion-based techniques to reconstruct missing or damaged detail. These models are intentionally more interpretive, although newer variants such as Wonder 2 emphasize higher fidelity and realism for restoration-oriented workflows.

Note: Face Recovery is an optional feature available within certain precision image models that applies localized diffusion-based reconstruction specifically to facial regions. When enabled, this feature introduces generative behavior at the operation level, while the model itself remains classified as a precision enhancement model. Use of Face Recovery is user controlled and should be evaluated based on source quality, editorial intent, and workflow requirements.
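One way to keep this distinction visible in a processing log is to track generative behavior per operation rather than per model. The following is a minimal sketch only: the `EnhancementJob` record, its fields, and the `uses_generative_reconstruction` helper are hypothetical names chosen for illustration and are not part of any Topaz product or API. The class labels simply follow the categories used in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnhancementJob:
    """Hypothetical record of one enhancement pass (not a Topaz API)."""
    model_name: str
    model_class: str             # "precision" or "generative", as used in this article
    face_recovery: bool = False  # optional diffusion-based facial reconstruction


def uses_generative_reconstruction(job: EnhancementJob) -> bool:
    """Return True if any part of the job may infer detail not in the source."""
    if job.model_class not in ("precision", "generative"):
        raise ValueError(f"unknown model class: {job.model_class!r}")
    # A precision model with Face Recovery enabled keeps its classification,
    # but the operation as a whole now includes generative behavior.
    return job.model_class == "generative" or job.face_recovery


job = EnhancementJob("Standard 2", "precision", face_recovery=True)
print(job.model_class, uses_generative_reconstruction(job))
```

Recording the flag alongside the model name lets a reviewer see at a glance which passes need closer editorial scrutiny, without reclassifying the model itself.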

Input Quality Still Matters

AI enhancement does not behave independently of the source.

High-quality inputs result in subtle refinement. Severely degraded inputs limit what non-generative models can recover and increase the role of reconstruction in generative workflows.

Responsible use begins with an honest assessment of source quality and editorial requirements before selecting a model.

Local Versus Cloud Processing

Processing location is a critical consideration for professional media teams.

Some post-production teams require local, on-premises processing due to security, compliance, or contractual constraints. For these teams, cloud processing may not be permitted.

Precision models are well suited for local workflows and can be integrated into existing post-production pipelines while maintaining full control over media security.

Generative diffusion models are significantly larger and more computationally intensive. At the time of writing, and with limited exceptions such as Starlight Mini and Starlight Sharp, most generative video models require cloud processing to deliver practical performance and consistent results. As model architectures and hardware capabilities continue to evolve, local execution of diffusion-based models is expected to expand, but processing location remains an important factor in current workflow planning.

Choosing the Right Workflow for Nonfiction Media

Preserving intent in professional media depends on deliberate choices. For most documentary, news, and unscripted workflows, precision models running locally provide the right balance of quality improvement, editorial control, and compliance. Generative models may be appropriate for restoration, reconstruction, or AI-generated source material when their use aligns with editorial standards and organizational policies.
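The guidance in this section can be summarized as a small decision helper. This is a sketch under stated assumptions, not a Topaz tool: the function name, its boolean inputs, and the `Recommendation` record are all hypothetical, and deciding whether footage counts as "severely degraded" remains an editorial judgment that code cannot make.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    """Hypothetical result type for illustration only."""
    model_class: str             # "precision" or "generative"
    needs_editorial_review: bool
    note: str


def recommend_model_class(severely_degraded: bool,
                          ai_generated_source: bool,
                          local_only: bool,
                          policy_allows_generative: bool) -> Recommendation:
    """Illustrative summary of the article's guidance for nonfiction work."""
    wants_reconstruction = severely_degraded or ai_generated_source
    # Default path: refine what is already in the source.
    if not wants_reconstruction or not policy_allows_generative:
        return Recommendation("precision", False,
                              "refine existing detail; suited to local processing")
    # Reconstruction path: most generative video models need cloud processing,
    # so a local-only team has to confirm that a local option exists.
    note = ("confirm a locally runnable generative model is available"
            if local_only else "cloud processing may be required")
    return Recommendation("generative", True, note)


print(recommend_model_class(False, False, True, True).model_class)
print(recommend_model_class(True, False, True, True).note)
```

The helper always flags generative output for editorial review, reflecting the point that reconstruction may infer detail that is not present in the original footage.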

It is also important to recognize that nonfiction and fictional content operate under different expectations. Fictional film and television have long relied on CGI, visual effects, and digital reconstruction as part of the storytelling process, while nonfiction content is held to stricter standards of authenticity.

By offering both precision enhancement models and diffusion models, Topaz enables professional teams to apply AI in ways that respect these distinctions. Responsible use is defined not by whether AI is used, but by how models are selected, applied, reviewed, and disclosed within professional workflows to preserve the original content’s character.

Why Choice Matters

At Topaz Labs, the goal has never been to promote a single approach to AI enhancement, but to give professionals meaningful choice. Topaz develops its models in the United States, with a focus on transparency, control, and the realities of professional post-production. By offering both precision and generative models, and by supporting local, on-premises processing in addition to cloud options, Topaz allows filmmakers and studios to decide how AI fits into their workflows. That flexibility matters in professional media, where editorial standards, security requirements, and creative intent vary widely.

Appendix A: Precision vs Generative AI Models in Professional Media

This appendix provides a high-level comparison of precision and generative AI enhancement models as used in professional image and video workflows. The comparison is intended to support editorial, technical, and compliance evaluation.

Appendix B: Terms and Definitions

Terms are listed alphabetically for reference.

AI Enhancement: The application of machine learning models to improve the quality and resolution of image or video content, including noise reduction, compression artifact repair, sharpening, face recovery, and resolution enhancement.

Editorial Intent: The journalistic or documentary purpose of the original content and how imagery is intended to be interpreted by the audience.

GAN (Generative Adversarial Network): A class of machine learning models composed of two neural networks, a generator and a discriminator, that are trained against each other to improve output quality. In enhancement workflows, GAN-based models are used to refine existing visual information rather than inventing new content.

Generative Diffusion Models: A class of generative machine learning models that reconstruct or infer visual information when source material is severely degraded, low resolution, or incomplete, by iteratively refining noise into structured imagery based on learned statistical patterns. These models incorporate learned semantic representations of objects, scenes, and structure, which enables reconstruction beyond the explicit visual information present in the source.

Generative Reconstruction: The inference or reconstruction of visual information not explicitly present in the original source material.

Original Content Character: The inherent visual attributes of the source material, including structure, texture, motion characteristics, and overall appearance.

Precision Models: AI enhancement models built using Generative Adversarial Network architectures that refine existing visual information without reconstructing or inferring new content.

Appendix C: Model Classification by Enhancement Type

Note: Most Topaz precision image models include an optional Face Recovery feature with Realistic and Creative settings that use diffusion techniques to improve facial clarity, allowing operators to control how much reconstructed facial detail is applied.