Virtual production has shifted the center of gravity in filmmaking. Instead of replacing environments in post, filmmakers are now committing imagery to camera on set, where CG backgrounds are rendered in real time with Unreal Engine. In this workflow, resolution, stability, and realism are non-negotiable. On an LED volume, every pixel is visible.

That reality came into focus on a recent commercial production overseen by Noah Kadner, a respected voice in the virtual production field who serves as Virtual Production Editor at American Cinematographer, wrote Epic Games’ Virtual Production Field Guide, and founded VirtualProducer.io.

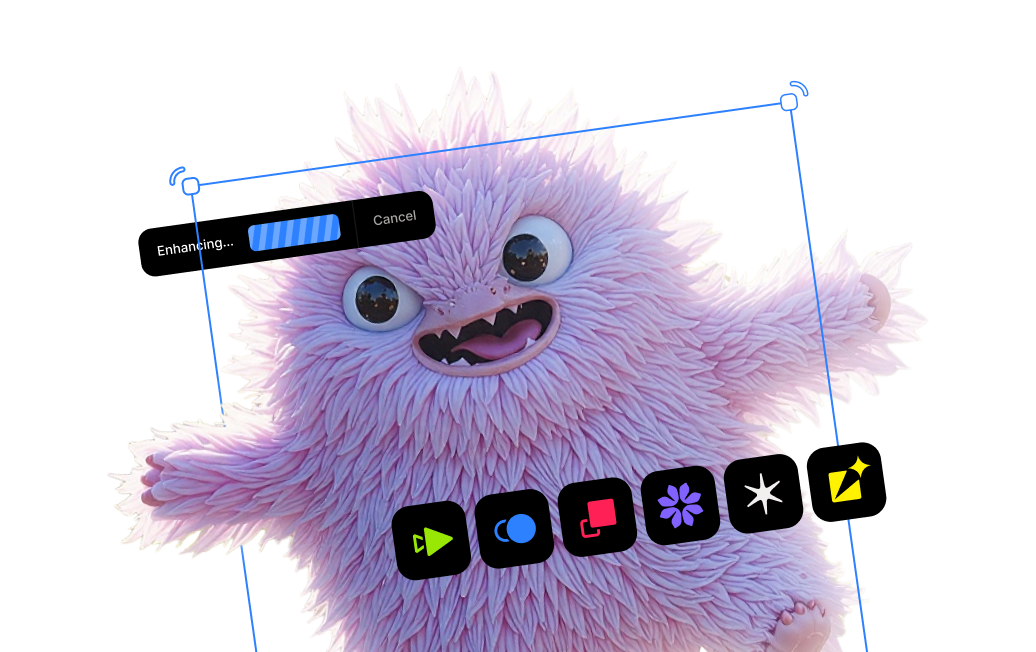

Facing a compressed schedule and an ambitious creative brief, Kadner turned to a native generative AI workflow, using Topaz Video to transform HD-sized imagery into camera-ready 15K backplates.

A Virtual Production Test Bed in Mexico

The project was produced on an LED stage operated by Simplemente, a Mexico City-based production and technology company with deep roots in post-production, systems integration, and camera workflows. The facility has hosted episodic series, commercials, and high-volume productions, and it functions as a constant laboratory for Kadner. For nearly three years, the stage has been used weekly, allowing Kadner to evaluate emerging virtual production and AI tools under real production pressure rather than in controlled demos.

“It’s one thing to test technology in theory,” Kadner notes. “It’s another to rely on it when you’re shooting every week and clients are standing on stage.”

Production on an Accelerated Timeline

“When I first saw the storyboards, I struggled to imagine how it could be accomplished with VP or CG alone,” Kadner says. “There wasn’t enough time or budget to dress and shoot period-accurate plates, nor create them in Unreal Engine or via CG, even in post.”

Traditional driving plates would have required permits, camera arrays, stitching, and weeks of turnaround. Fully synthetic environments carried similar constraints. Instead, Kadner and his team leaned into generative AI to create full driving plates quickly. Using tools including Higgsfield, Kling, NanoBanana, and ComfyUI, they generated anamorphic background plates designed specifically for projection on an LED volume.

“We poured our efforts into generating realistic driving plates via AI,” Kadner explains. “We got so good at it that we were able to do it live on the production day, in between takes.”

“Being able to load a preset in Topaz Video, hit render, and know exactly what you’re going to get really matters. We were literally betting the shoot on it.”

A Practical Breakthrough for In-Camera VFX

Running locally on both Mac and Windows systems, Kadner’s team used Topaz Video to render ultra-high-resolution ProRes HQ outputs directly on set, eliminating cloud transfer latency and enabling rapid iteration while the crew waited.

“As someone who’s seen opticals evolve to digital compositing, green screen to virtual production, I can’t overstate how much of a game changer AI is for in-camera VFX,” Kadner says. “You can get what you want, live, in camera, and almost instantly.”

Just as important, he adds, is that this approach preserves traditional craft. “There’s a way to leverage AI that brings all the crew and their experience along for the ride. This project wouldn’t have happened without it.”

Topaz Labs in the Virtual Production Workflow

Generative AI is reshaping how imagery is created, but resolution and reliability remain limiting factors for virtual production. By rendering locally and enabling fast, dependable upscaling to extreme resolutions, Topaz Video helps bridge that gap, turning generative concepts into camera-ready reality on modern LED stages.