At the USC Entertainment Technology Center (ETC@USC), filmmakers and technologists pressure test emerging tools within real production workflows.

For The Bends, a short film created entirely from native generative AI sources, the ETC partnered with Topaz Labs to answer a practical question: can AI-generated footage survive a professional VFX and color pipeline and be finished for theatrical exhibition, including a 35mm film print? This project was not about novelty. It was about whether AI imagery could meet real finishing standards.

What the ETC Does

The ETC operates at the intersection of industry, education, and applied research. Rather than speculative demos, it uses real productions to validate workflows studios can trust. ETC Executive Producer Tom Thudiyanplackal describes the goal simply:

“Our job at the ETC is to use short films as a proof of concept. We explore where emerging technologies genuinely extend filmmaking and where they still fall short, albeit without the pressure of commercial delivery.”

A Generative Workflow Structured Like Animation

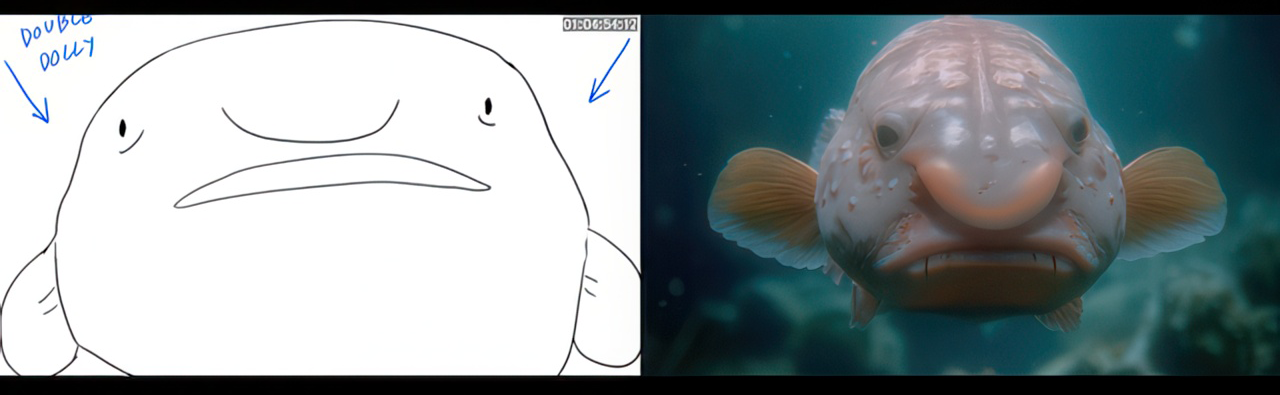

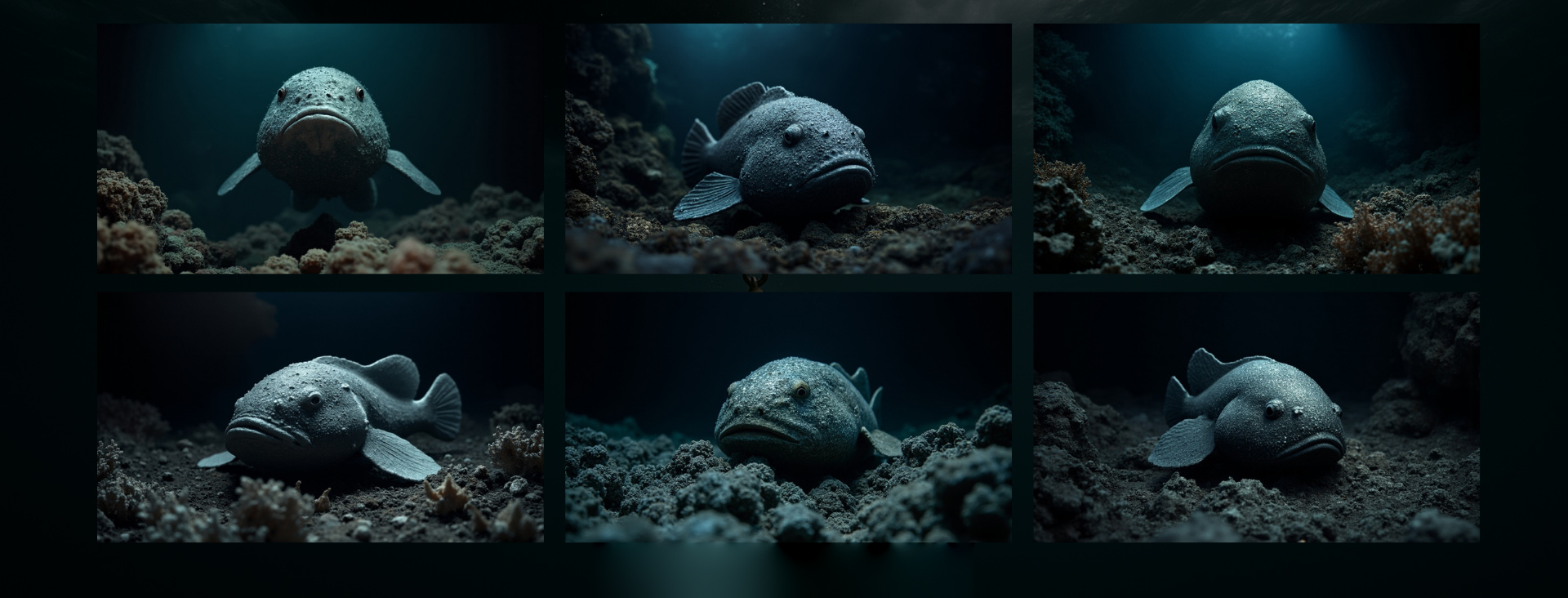

Directed by filmmaker Tiffany Lin, The Bends followed an animation-style approach, even though no traditional animation or live-action was used.

Every shot was storyboarded and timed before generation began. Those boards functioned like an animatic, providing structure for AI generation. Prompts were treated as shots rather than general descriptions, helping preserve intent and continuity across sequences.

To maintain consistency at scale, the ETC combined diffusion-based image and video models with ControlNets for framing, LoRAs trained on custom datasets for character and environment consistency, and synthetic data to define a unified visual language. Storyboard images were further edited and composited in Photoshop.

This project was completed before the release of Nano Banana. Several LoRA-based consistency workflows used at the time are now streamlined or handled natively by newer technologies like Nano Banana, significantly reducing setup time while improving reliability.

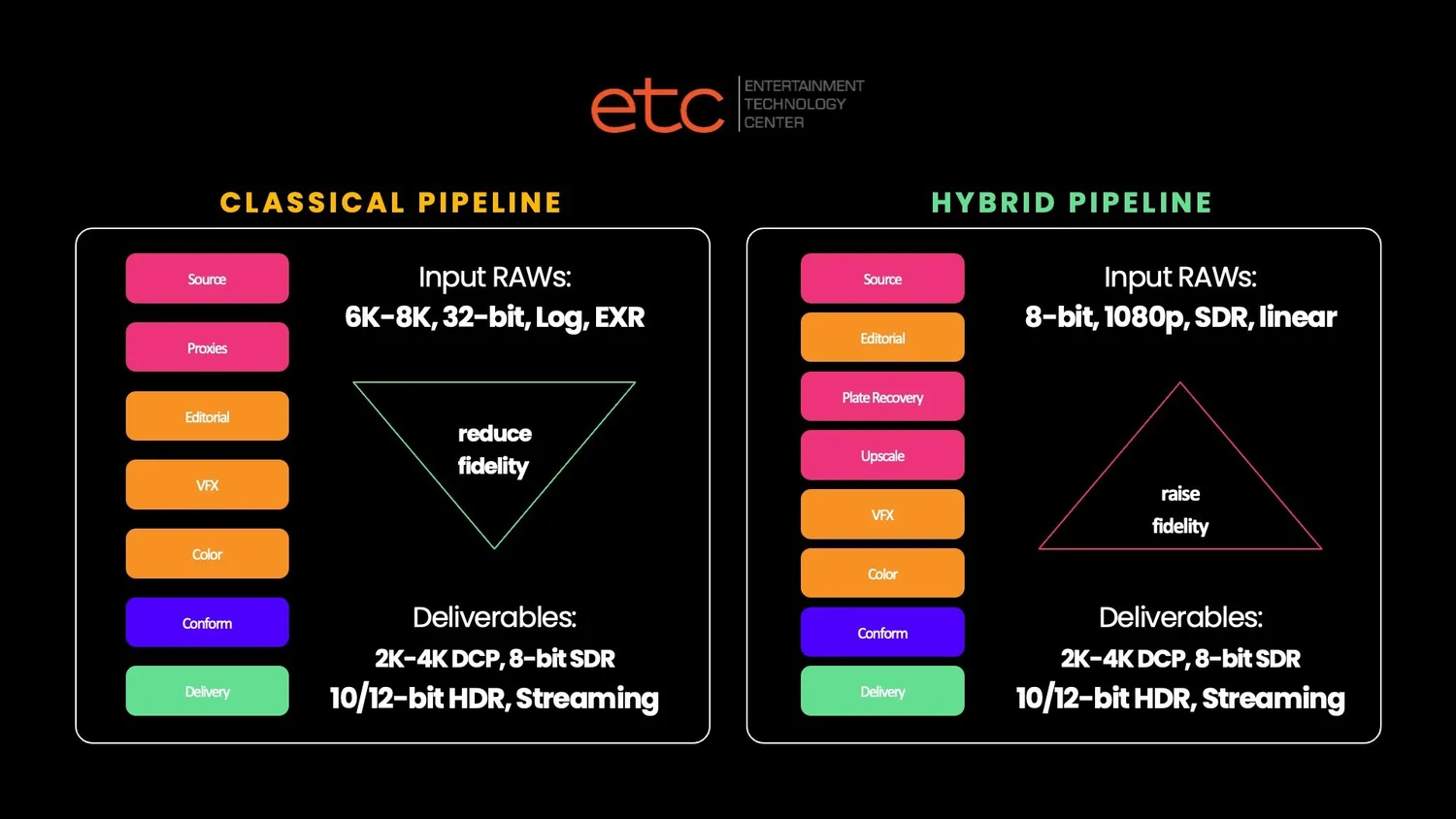

The Core Challenge: AI Inverts the Film Pipeline

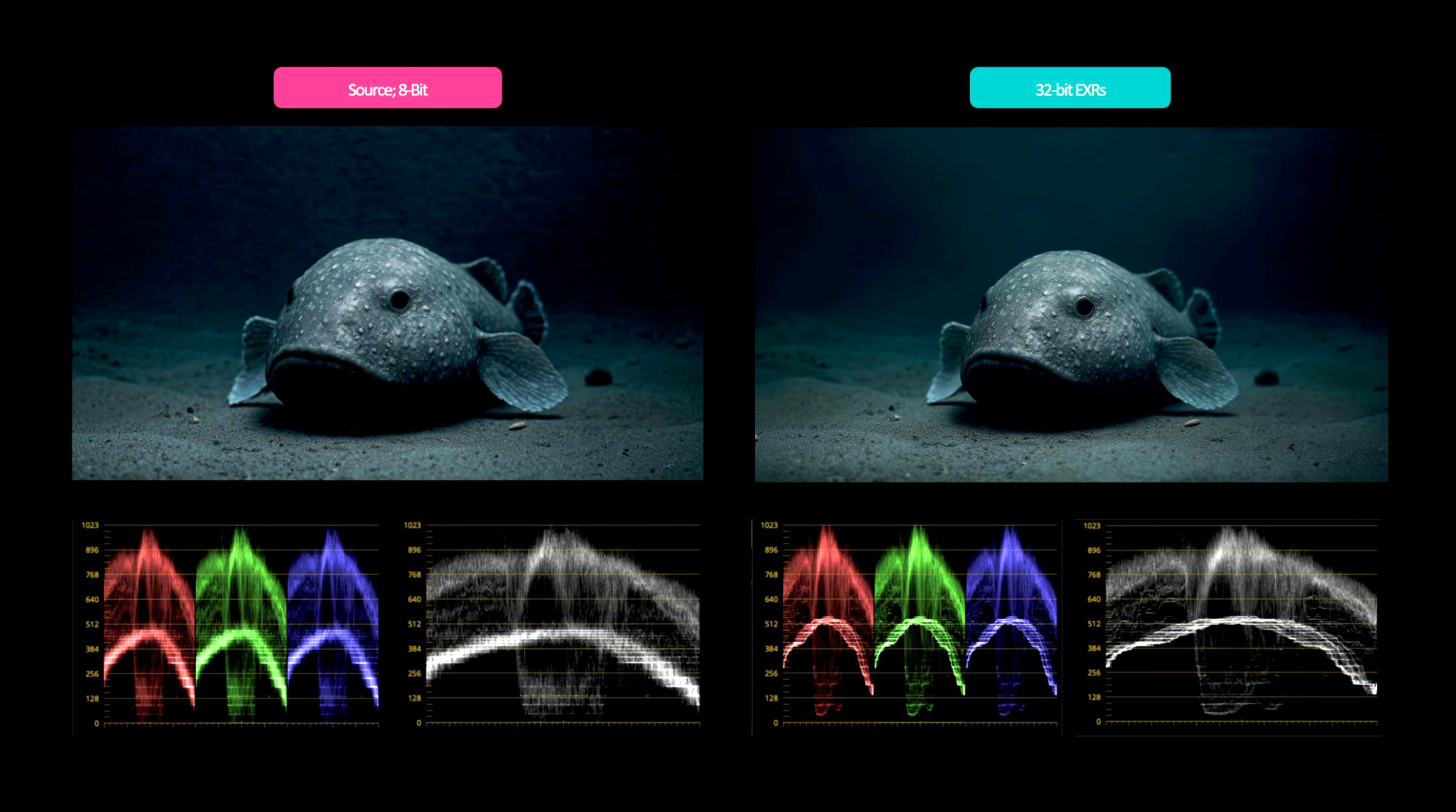

Most generative video tools output 8-bit, compressed SDR media. These formats may work online but fail quickly in professional environments.

- Banding and compression artifacts become obvious on large screens

- Limited bit depth collapses under grading and VFX

- Professional pipelines expect high-precision EXR media
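To make the bit-depth point concrete, here is a minimal Python sketch. It is not part of the ETC workflow. It assumes a dark tonal ramp, a 4x gain standing in for an aggressive shadow lift during grading, and a 10-bit output; the function names and values are illustrative only.

```python
def quantize(value, bits):
    """Round a normalized [0, 1] value to the nearest integer code at a bit depth."""
    max_code = (1 << bits) - 1
    clamped = min(max(value, 0.0), 1.0)
    return round(clamped * max_code)


def graded_levels(source_bits, gain, samples=4096, lo=0.05, hi=0.15):
    """Count distinct 10-bit output codes after a ramp is stored at
    source_bits and then brightened by gain (assumed grade, not a real LUT)."""
    src_max = (1 << source_bits) - 1
    output_codes = set()
    for i in range(samples):
        v = lo + (hi - lo) * i / (samples - 1)
        # What the file actually holds after quantization at the source depth.
        stored = quantize(v, source_bits) / src_max
        # Apply the grade, then quantize for a 10-bit deliverable.
        output_codes.add(quantize(stored * gain, 10))
    return len(output_codes)


eight_bit = graded_levels(8, 4.0)
sixteen_bit = graded_levels(16, 4.0)
print(f"distinct output levels from 8-bit source:  {eight_bit}")
print(f"distinct output levels from 16-bit source: {sixteen_bit}")
```

The 8-bit source can only ever supply the handful of codes it stored in the shadows, so stretching them leaves visible gaps between levels. The higher-precision source fills the same output range with many more steps, which is why the same grade reads as banding in one case and as a smooth ramp in the other.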

In traditional filmmaking, image quality flows downhill from camera to delivery. With generative AI, that process is inverted. Fidelity must be rebuilt after each generation.

The ETC illustrates this with charts showing how AI production flips the conventional pipeline, forcing post-production to reconstruct robustness that normally exists at capture.

Post-production and AI workflow development were led by Sahil Lulla, Head of Innovation at Bitrate Bash and AI Post-Production Research Head on The Bends at the ETC. His approach treated native AI outputs the same way a VFX facility would treat compromised plates.

“We started with an 8 bit SDR file, which is almost like your proxy,” Lulla explains. “You have to raise fidelity through each downstream step.”

To do that, Lulla worked with Erasmo Romero from the Topaz team to develop a three-step enhancement workflow using Topaz Video models.

The Three-Step Solution: Topaz Video Models in Sequence

• Step 1: Astra (Precise, Quality 4K)

Native GenAI footage was first processed with the Starlight Precise model in Astra to improve base resolution and restore detail.

• Step 2: Nyx (Manual Mode)

The output was then processed in Topaz Video using the Nyx model in Manual mode, with all controls set to zero except Reduce Compression at 100. This step targeted banding and macroblocking artifacts without altering the image structure.

• Step 3: Gaia HQ (EXR Output)

Finally, the cleaned footage was processed with Gaia HQ, with output set to image sequences in EXR format, moving the material into a 32-bit EXR working space suitable for professional VFX and color workflows.

Editor’s Note: This workflow was designed to increase spatial resolution and bit depth while preserving original source characteristics, moving native GenAI footage into a 32-bit EXR container without adding or hallucinating detail.
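For teams that want to log this sequence alongside their shots, the three steps can be written down as plain data. The sketch below is only a record-keeping aid: the class, the field names, and the setting keys are hypothetical labels chosen for illustration and are not Topaz parameter names or an API. The values themselves are taken from the steps described above.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class EnhancementStep:
    """One pass in the enhancement chain (hypothetical record type)."""
    order: int
    model: str
    purpose: str
    settings: dict = field(default_factory=dict)


# Values come from the article; the keys are illustrative labels only.
PIPELINE = [
    EnhancementStep(1, "Starlight Precise (Astra)",
                    "improve base resolution and restore detail",
                    {"preset": "Precise, Quality 4K"}),
    EnhancementStep(2, "Nyx (Manual mode)",
                    "target banding and macroblocking",
                    {"reduce_compression": 100, "all_other_controls": 0}),
    EnhancementStep(3, "Gaia HQ",
                    "move footage into a 32-bit EXR working space",
                    {"output": "EXR image sequence"}),
]


def describe(pipeline):
    """Return one human-readable line per step, in processing order."""
    lines = []
    for step in sorted(pipeline, key=lambda s: s.order):
        opts = ", ".join(f"{k}={v}" for k, v in step.settings.items())
        lines.append(f"{step.order}. {step.model}: {step.purpose} [{opts}]")
    return lines


for line in describe(PIPELINE):
    print(line)
```

Keeping the order explicit matters here because each pass assumes the previous one has already run: artifact cleanup operates on the upscaled frames, and the EXR conversion operates on the cleaned ones.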

“Topaz helped us cover the last mile, turning fragile AI footage into something that could resiliently survive the rigorous push and pull of industry grade post-production.” - Tom Thudiyanplackal, ETC@USC

Back Into a Traditional Pipeline

Once upscaled and brought into 32-bit EXRs, the footage entered a conventional post pipeline using tools like Adobe After Effects, Nuke, Avid Media Composer and DaVinci Resolve. Work at this stage included:

- Shot-based VFX compositing

- Added lensing effects and chromatic aberrations

- Color grading and synthetic film emulsion

File Journey:

- File format progression: 720p H.264 → cleaned 8-bit → 4K 32-bit EXR → 16-bit HDR EXR

- Color space journey: sRGB (35.9% of the visible spectrum) → Rec.709 → Rec.2020 (75.8% of the visible spectrum)

- Professional compatibility: Final files suitable for DaVinci Resolve, Avid, and other cinema workflows
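The format progression also changes how much data each frame carries. The rough arithmetic below is a sketch under stated assumptions: "4K" is taken as 3840x2160, frames are three-channel RGB, and sizes are uncompressed pixel payloads with no file headers or EXR compression. Real files on disk will differ, and the H.264 source is far smaller than its decoded size.

```python
def frame_bytes(width, height, bits_per_channel, channels=3):
    """Uncompressed pixel payload of one frame, in bytes."""
    return width * height * channels * bits_per_channel // 8


# Assumed dimensions: 1280x720 for 720p, 3840x2160 for 4K.
source_720p = frame_bytes(1280, 720, 8)     # decoded 8-bit source frame
working_4k = frame_bytes(3840, 2160, 32)    # 32-bit working frame
delivery_4k = frame_bytes(3840, 2160, 16)   # 16-bit delivery frame

for label, size in [
    ("720p 8-bit", source_720p),
    ("4K 32-bit", working_4k),
    ("4K 16-bit", delivery_4k),
]:
    print(f"{label:>11}: {size / 1_000_000:.1f} MB per frame")

print(f"working vs. source: {working_4k // source_720p}x")
```

Under these assumptions a single 4K 32-bit frame holds 36 times the raw data of a decoded 720p 8-bit frame (9 times the pixels at 4 times the bytes per channel), which is worth planning for in storage and render throughput.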

Why This Matters

The Bends makes one thing clear. Generative AI can accelerate content creation, but delivering a 4K cinema-quality viewing experience still depends on structure, control, and finishing discipline. By pairing the ETC’s production rigor with Topaz’s production-ready upscaling and enhancement tools, the team showed how native GenAI video can be elevated for professional post workflows, including visual effects and finishing.

As the ETC continues to explore longer-form storytelling, higher dynamic range delivery, and deeper AI integration across professional post pipelines, projects like The Bends point toward what comes next.