Outline

- Step 1: Generating consistency with Higgsfield.ai

- Step 2: Extracting stills from videos to use as new starting points

- Step 3: Generating new videos from extracted still images

- Step 4: Stitching everything together

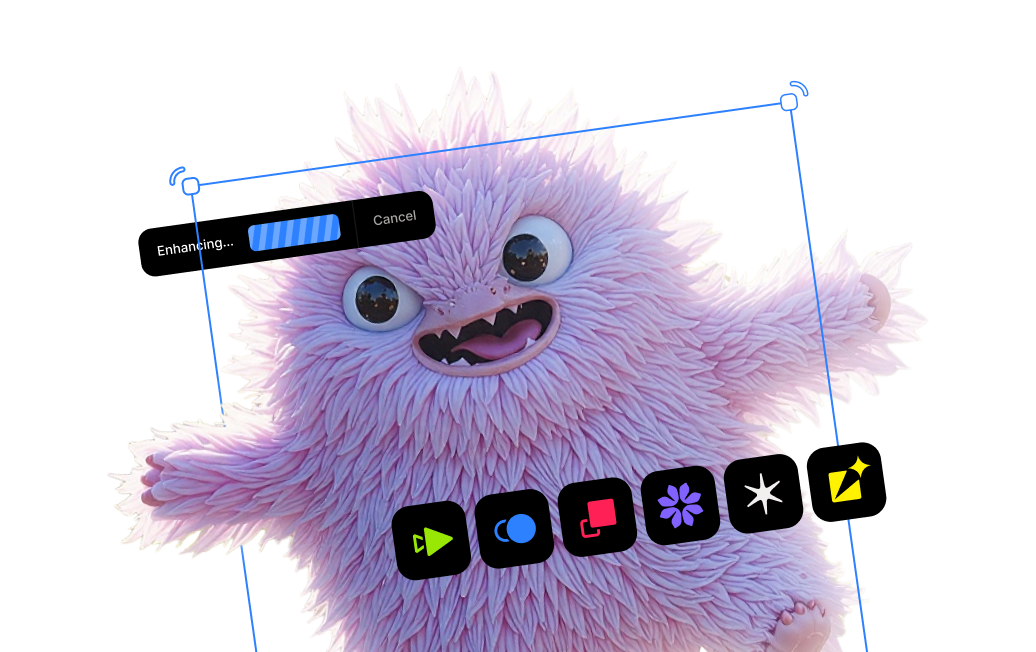

Not too long ago, our team had a breakthrough: we turned our idea of a fantasy character into reality with the help of tools such as Topaz Bloom and Astra. We came away feeling energized, but we also felt the looming presence of our next big challenge: How do we take that one great shot and continue building scenes around this same character without losing the visual style and feel that makes him unique?

If you’ve used any number of the exciting new generative AI video platforms out there, you’ll know that while they’re great at generating new characters, details, and scenes, maintaining visual and stylistic continuity can be a big challenge. This became the central problem we had to solve if we were going to expand upon the narrative from that first video clip.

Our next goal was to create a new scene featuring the fire mage from a different angle, rather than straight-on. If you remember, our original image (enhanced with the help of Bloom) showed the fire mage facing us straight-on, and the video derived from it was shot from that same angle. Here’s a reminder:

Our team dove in, testing a whole slew of generative AI video platforms. The results varied widely, from slightly amusing to truly horrifying, but none achieved the look and feel we were after.

One of the most difficult technical hurdles we faced was generating different views of a character that actually felt like the exact same character. It’s surprisingly hard to get a side profile or a rear view without the AI producing something that feels warped or off-model. Even when we managed to generate something fairly consistent, the AI would add extra details we didn’t want.

However, after hours of trial and error, we started to achieve some very interesting results from somewhere entirely unexpected.

Higgsfield.ai is a generative video platform designed with content creators and creative professionals in mind. At first glance, it appeared geared toward social media influencers, which didn't seem like quite the right fit for us. However, we forged ahead.

We spent a good while poking around and exploring, and eventually navigated to the “Create” -> “Video” section of the app. This was where things got interesting for us.

Here, we can upload a single image to use as the starting point for our video, similar to the process we followed with Midjourney previously. We can also add a text prompt and select what Higgsfield calls a “preset”. Here’s a sampling of just a few of the dozens of presets we saw available:

From our tests, a preset is a predetermined set of camera movements and visual effects applied to the base image to generate a short 3- or 5-second video clip. We found that when using a preset, a text prompt isn’t required, although there is a feature that writes one for you based on the uploaded image and the chosen preset.

Beyond that, you can select which model to use for generating the video. In addition to Higgsfield’s native AI models (ranging from Lite to Turbo), we saw Google Veo 3 and Hailuo models available as well. For now, we stuck with the Higgsfield models.

We noticed that only one image is needed to begin generating a video, with the option to add a second image as the end frame (the end-frame option is available only with certain models).

We uploaded our original fire mage image as the starting point and left the optional end frame empty. For the preset, we chose one called “Arc Right”, which moves the camera in a partial orbit around the subject’s face: exactly what we were looking for. With these settings, and after a few minutes of rendering, we ended up with the following video clip:

Our team was impressed with this result: by using just a single reference image and no additional text prompt, we generated a realistic, roughly 90-degree camera orbit of our fire mage. We were pleased to see the new video had successfully preserved all the intricate details we cared about—his armor, his intense gaze, and the overall dark fantasy aesthetic. Best of all, this was unmistakably the same character as the one we started off with.

While we were impressed by the resulting video, we were even more excited about what it contained: a plethora of still images just waiting to be plucked out and used in other generative applications.

Using a free frame extraction tool (we used one called Sharp Frames), we took a few minutes to scroll through the newly generated clip to select the exact angle we wanted. We settled on this one:

We loved the small embers of flame as well as the new perspective. But the best part? This new still image flawlessly matched the style and quality of our initial one. It didn't feel like a separate asset; it felt like a moment from a natural camera movement within the same scene.

We knew this high-quality and stylistically consistent frame was the perfect foundation for the next scene our team had been planning.
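We used Sharp Frames, a GUI tool, for this step. For readers who prefer the command line, the same two moves (browse candidate frames, then grab the exact one) can be sketched with ffmpeg. This is a minimal sketch under stated assumptions, not the workflow we followed: it assumes ffmpeg is installed, and the file names (`arc_right.mp4`, `side_view.png`, `frames/`) and the sampling rate of 4 frames per second are hypothetical placeholders. The helpers only build argument lists; nothing runs until you pass one to `subprocess.run(cmd, check=True)`.

```python
from pathlib import Path


def build_sample_cmd(video: str, out_dir: str, fps: float = 4.0) -> list[str]:
    """Build an ffmpeg command that writes `fps` frames per second of `video`
    as numbered PNGs (frame_001.png, frame_002.png, ...) for browsing.
    Note: ffmpeg does not create out_dir, so make the folder first."""
    pattern = str(Path(out_dir) / "frame_%03d.png")
    return ["ffmpeg", "-i", video, "-vf", f"fps={fps:g}", pattern]


def sample_timestamp(frame_number: int, fps: float) -> float:
    """Approximate time, in seconds, of the n-th PNG (1-based) written by
    build_sample_cmd at the same fps."""
    return (frame_number - 1) / fps


def build_extract_cmd(video: str, timestamp_s: float, out_png: str) -> list[str]:
    """Build an ffmpeg command that saves the single frame at `timestamp_s`."""
    return [
        "ffmpeg", "-y",               # overwrite out_png if it already exists
        "-ss", f"{timestamp_s:.3f}",  # seek to the chosen moment (seconds)
        "-i", video,
        "-frames:v", "1",             # write exactly one video frame
        out_png,
    ]
```

For example, running `build_sample_cmd("arc_right.mp4", "frames")` fills `frames/` with candidates. If `frame_011.png` shows the angle you want, `sample_timestamp(11, 4.0)` gives 2.5 seconds, and `build_extract_cmd` can then grab that moment, or a timestamp nudged slightly around it, as a standalone still.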

Next, we wanted to create a transition scene with dramatic flair, and this extracted side-view frame would serve nicely as its starting point.

Back in Higgsfield, we started another video and uploaded this new image as the starting point. This time, we chose a different preset called “Eyes In”, which has the camera zoom into the subject’s eye.

After processing for a few minutes, we ended up with this:

Again, we were impressed with the stylistic continuity captured in this video. Nothing felt out of place: no odd details and no strange movements.

By leveraging this workflow—Video to Frame, Frame to New Video—our team can construct a complete, visually consistent narrative. It's an iterative process that gives us an incredible amount of creative control, allowing us to direct our own AI-powered animations one high-quality and continuous scene at a time.

We’ve now got two new scenes: a partial orbiting shot, and one that zooms into his eye. All that remains is to combine them into one seamless shot.

Since our “Eyes In” video starts from a frame of the original “Arc Right” video, we can seamlessly join the two clips at that shared frame.

We opened up Adobe Premiere (any video editing software should do just fine) and got started.

If you recall, in the last 2 seconds of the “Arc Right” video our fire mage's face is partially obscured by his flowing red cloak. While it's a cool effect, it's not what we had in mind. Fortunately, the still we extracted comes from before those last 2 seconds, and because the “Eyes In” clip picks up from that still, cutting the “Arc Right” clip at that frame leaves the cloak section out of our final output.
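We did the splice by hand in Premiere, but the core edit (keep the “Arc Right” clip up to the extracted frame, then append all of “Eyes In”) can also be scripted. The following is a minimal sketch under stated assumptions, not our actual process: it assumes ffmpeg is installed, uses hypothetical file names, takes the cut time to be the timestamp of the frame you extracted, assumes both clips share the same resolution (the `concat` filter requires this), and ignores audio. It leaves out the color correction and zoom-timing tweaks.

```python
def build_stitch_cmd(first_clip: str, second_clip: str,
                     cut_s: float, out_mp4: str) -> list[str]:
    """Build an ffmpeg command that keeps first_clip up to cut_s seconds,
    then appends all of second_clip. Only video is mapped; audio is dropped."""
    if cut_s <= 0:
        raise ValueError("cut_s must be positive")
    graph = (
        # trim's `end` is exclusive: the frame at cut_s is dropped, so the
        # second clip, which starts from that same still, takes over there.
        f"[0:v]trim=end={cut_s:.3f},setpts=PTS-STARTPTS[a];"
        # reset timestamps so the second segment also starts at 0
        "[1:v]setpts=PTS-STARTPTS[b];"
        # join the two video segments back to back
        "[a][b]concat=n=2:v=1:a=0[out]"
    )
    return [
        "ffmpeg", "-y",
        "-i", first_clip,
        "-i", second_clip,
        "-filter_complex", graph,
        "-map", "[out]",
        out_mp4,
    ]
```

For example, `build_stitch_cmd("arc_right.mp4", "eyes_in.mp4", 2.5, "fire_mage.mp4")` cuts the orbit at 2.5 seconds. Because that cut falls before the final 2 seconds, the cloak-obscured tail never reaches the output. Pass the list to `subprocess.run(cmd, check=True)` to render it.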

We spliced the two clips together, aiming to make the transition between the two scenes as seamless as possible. Beyond a few tweaks, including minor color correction on the red cloak and adjusting the timing of the “Eyes In” zoom, the process was fairly straightforward. Here is the final product:

Considering that this video clip was completely derived from a single image, we were pleased with the progress we were making on bringing our character to life. Newer, bigger ideas began to take shape as we mulled over what would come next.

We hope you’re as curious as we are about what’s around the corner for our fire mage. Stay tuned for the next update!